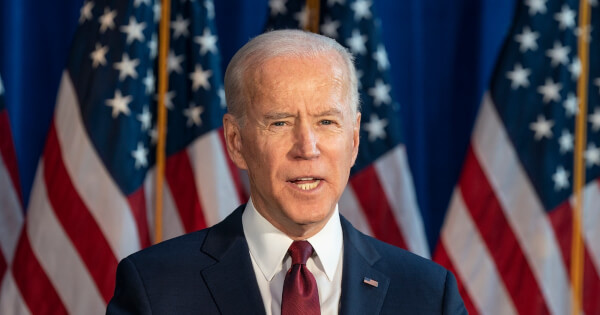

Biden-Harris Administration Secures AI Commitments from Major Tech Companies

Luisa Crawford Sep 12, 2023 11:58

The Biden-Harris Administration has secured voluntary commitments from eight more artificial intelligence (AI) companies to manage AI risks. The commitments emphasize safety, security, and trust: the companies agree to put AI products through security testing, share information on risk management, prioritize cybersecurity, and develop mechanisms that tell users when content is AI-generated. The Administration is also developing an Executive Order on AI and pursuing bipartisan legislation to position America as a leader in responsible AI development.

In today's press release from the White House, the Biden-Harris Administration announced that it has secured voluntary commitments from eight more artificial intelligence (AI) companies to manage the risks associated with AI. This move builds upon the commitments from seven AI companies obtained in July.

Companies Involved

The latest round of commitments includes major tech players such as Adobe, Cohere, IBM, Nvidia, Palantir, Salesforce, Scale AI, and Stability. These companies have pledged to drive the safe, secure, and trustworthy development of AI technology.

Nature of Commitments

The commitments emphasize three core principles for AI's future: safety, security, and trust. The companies have agreed to:

- Ensure AI products undergo both internal and external security testing before public release.

- Share information on managing AI risks with the industry, governments, civil society, and academia.

- Prioritize cybersecurity and protect proprietary AI system components.

- Develop mechanisms to inform users when content is AI-generated, such as watermarking.

- Publicly report on their AI systems' capabilities, limitations, and areas of use.

- Prioritize research on societal risks posed by AI, including bias, discrimination, and privacy concerns.

- Develop AI systems to address societal challenges, ranging from cancer prevention to climate change mitigation.

Government Action

These voluntary commitments are seen as a bridge to forthcoming government action. The Biden-Harris Administration is in the process of developing an Executive Order on AI to ensure the rights and safety of Americans. The Administration is also pursuing bipartisan legislation to position America as a leader in responsible AI development.

International Collaboration

The Administration has consulted with numerous countries, including Australia, Brazil, Canada, France, Germany, India, Japan, and the UK, among others, in developing these commitments. This international collaboration complements initiatives like Japan’s G-7 Hiroshima Process and the United Kingdom’s Summit on AI Safety.

Previous Initiatives

The Biden-Harris Administration has been proactive in addressing AI's challenges and opportunities. Notable actions include:

- Launching the "AI Cyber Challenge" in August to use AI in protecting crucial US software.

- Meeting with consumer protection, labor, and civil rights leaders to discuss AI risks.

- Engaging with top AI experts and CEOs from companies like Google, Microsoft, and OpenAI.

- Publishing a Blueprint for an AI Bill of Rights and ramping up efforts to protect Americans from AI risks, including algorithmic bias.

- Investing $140 million to establish seven new National AI Research Institutes.

The Administration's consistent efforts underscore its commitment to ensuring that AI is developed safely and responsibly, safeguarding Americans' rights and safety, and protecting them from potential harm and discrimination.

Disclaimer & Copyright Notice: The content of this article is for informational purposes only and is not intended as financial advice. Always consult with a professional before making any financial decisions. This material is the exclusive property of Blockchain.News. Unauthorized use, duplication, or distribution without express permission is prohibited. Proper credit and direction to the original content are required for any permitted use.

Image source: Shutterstock